Fast Fashion Counts on AI and ML

In the world of fast fashion, clothing styles are designed, produced, and retailed within a matter of weeks. In this lightning-quick industry, time is money, and any setback in the manufacturing process can mean lost profits. Garment production must therefore deliver not just precise color matching, flawless fabric, and consistent sizing, but faster order-to-ship times as well.

Automated quality inspection—enabled by computer vision (CV) and machine learning (ML) technologies—helps apparel suppliers offset delays in the fabrication process and increase transparency for all stakeholders.

Take the Dianshi Clothing Company, for example. The textile manufacturer has implemented deep-learning algorithms on the production line of its plant in Hangzhou, China, to count the number of garments being produced and check each for surface defects. To integrate these capabilities with its existing manufacturing execution system (MES), Dianshi partnered with manufacturing automation supplier Kinco and AI expert Aotu.ai on a smart-vision system that increased productivity and efficiency while reducing costs.

The solution lets the company easily keep count of finished garments by SKU. The automated counting system is also flexible: the SKU being worked on can be switched at any time without manual input (Video 1).

“Let’s say you order a small batch of garments from an online wholesale store like Alibaba,” says Aotu.ai CTO and co-founder Alex Thiel. “The idea is that you can see a dashboard with exactly how many garments have been built, all the way down to the factory level. And from the factory’s perspective, this allows it to easily switch what’s being worked on, several times a day, without any workflow changes.”
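The article does not describe how the counter handles SKU changeovers internally. The sketch below is one plausible approach, assuming an upstream object tracker assigns each garment a stable ID across video frames. `GarmentCounter`, `set_active_sku`, and `record` are hypothetical names for illustration, not part of BrainFrame.

```python
from collections import Counter
from typing import Optional, Set


class GarmentCounter:
    """Hypothetical per-SKU tally; illustrative only, not BrainFrame's API."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()   # finished garments per SKU
        self.active_sku: Optional[str] = None
        self._seen: Set[int] = set()       # tracker IDs already counted

    def set_active_sku(self, sku: str) -> None:
        # Switching SKUs only redirects future counts; earlier totals are kept.
        self.active_sku = sku

    def record(self, track_id: int) -> None:
        # Assumes an upstream tracker gives each garment a stable ID across
        # frames, so a garment visible in many frames is counted only once.
        if self.active_sku is None:
            raise RuntimeError("set an active SKU before counting")
        if track_id in self._seen:
            return
        self._seen.add(track_id)
        self.counts[self.active_sku] += 1


counter = GarmentCounter()
counter.set_active_sku("TSHIRT-M")
for tid in (1, 1, 2, 3):              # garment 1 appears in two frames
    counter.record(tid)
counter.set_active_sku("DRESS-S")     # mid-shift changeover, no reset needed
counter.record(4)
print(dict(counter.counts))           # {'TSHIRT-M': 3, 'DRESS-S': 1}
```

Because switching SKUs only changes where new counts go, a factory could change what it is producing several times a day without clearing or re-entering earlier totals.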

Open-Platform Machine Learning

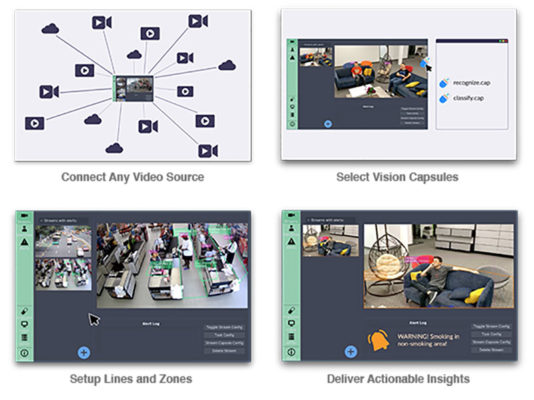

At the heart of the solution is the BrainFrame platform, which transforms any connected video camera into a continuously monitoring smart-vision camera. The platform simplifies computer vision development by providing an easy-to-use GUI for configuration, drag-and-drop Vision Capsules available in an app-store-like repository, and the ability to scale the hardware horizontally to support hundreds of video streams simultaneously (Figure 1).

The OpenVisionCapsules open-source packaging format, which bundles all the code, files, and metadata needed to describe and implement an ML algorithm, means developers need less domain expertise. “Our system is built to be easy to use and enable scientists to build robust production systems without much knowledge about what that entails,” says Thiel.

BrainFrame optimizes vision algorithms while requiring a negligible amount of memory itself. To accomplish this, the platform integrates the Intel® OpenVINO™ Toolkit and comes pre-optimized to work with Intel® processors.

According to Thiel, the neural-network optimization environment lowers deployment costs and enhances the ability to customize computer vision designs. “Using OpenVINO through BrainFrame is faster and reduces, if not eliminates, writing more code. That’s because the VisionCapsule library has so much of the code you’re going to need.”

The garment counting and recognition use case required that video input be fed into a counting algorithm and then into a business-logic capsule. To avoid the time and effort of developing a fully custom algorithm, the BrainFrame platform wraps an OpenVINO image-retrieval module in a VisionCapsule, allowing Aotu.ai’s identity control APIs to work on clothing images.
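To make the described flow concrete, here is a minimal sketch of a retrieval, counting, and business-logic chain. It assumes an image-retrieval network has already turned each detected garment into an embedding vector; the tiny three-element vectors below are made-up stand-ins, and matching by cosine similarity against a gallery of reference embeddings is a common retrieval technique swapped in for illustration. All function names and the `threshold` value are hypothetical, not BrainFrame or OpenVINO APIs. The sketch also assumes each embedding is one newly detected garment, leaving cross-frame de-duplication out.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify_sku(embedding: Vector, gallery: Dict[str, Vector],
                 threshold: float = 0.9) -> Optional[str]:
    """Retrieval stage: nearest gallery SKU by cosine similarity, or None."""
    best_sku, best_score = None, -1.0
    for sku, reference in gallery.items():
        score = cosine_similarity(embedding, reference)
        if score > best_score:
            best_sku, best_score = sku, score
    return best_sku if best_score >= threshold else None


def count_stage(skus: List[Optional[str]], counts: Dict[str, int]) -> Dict[str, int]:
    """Counting stage: tally identified garments, skipping unknowns."""
    for sku in skus:
        if sku is not None:
            counts[sku] = counts.get(sku, 0) + 1
    return counts


def business_logic_stage(counts: Dict[str, int],
                         orders: Dict[str, int]) -> List[str]:
    """Business-logic stage: SKUs whose ordered quantity has been reached."""
    return sorted(sku for sku, qty in orders.items() if counts.get(sku, 0) >= qty)


def process_frame(embeddings: List[Vector], gallery: Dict[str, Vector],
                  counts: Dict[str, int], orders: Dict[str, int]) -> List[str]:
    # Chain the stages in the order the text describes.
    skus = [identify_sku(e, gallery) for e in embeddings]
    return business_logic_stage(count_stage(skus, counts), orders)


gallery = {"TSHIRT-M": [1.0, 0.0, 0.0], "DRESS-S": [0.0, 1.0, 0.0]}
counts: Dict[str, int] = {}
done = process_frame([[0.95, 0.05, 0.0], [0.1, 0.99, 0.0], [0.0, 0.0, 1.0]],
                     gallery, counts, orders={"TSHIRT-M": 1, "DRESS-S": 2})
```

Here the third embedding matches neither reference closely enough, so it is left uncounted rather than forced into the nearest SKU.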

Because multiple models can work together, computer vision development and deployment become simpler, more scalable, and more cost-effective.

“Deployment at the garment production factory consisted of simply dragging and dropping a selection of VisionCapsules,” says Thiel. “There was no need to write custom code. What they had been working on for six months, we deployed in about two weeks.”


App-Store Model for Computer Vision Model Development

Development of the BrainFrame platform came about after Aotu.ai went through the process of building CV solutions for multiple customers. Each needed slightly different applications and models, making development and deployment time-consuming and expensive.

One reason the BrainFrame approach is so fast and simple is that it works much like an app store. Developers choose from a menu of algorithms and other components, each of which can be added much like downloading an app, and these components work together to deliver the desired functionality. For example, to create a vision-based fruit identification system, an image recognition model focused on identifying shapes can be combined with another optimized to detect colors.
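The fruit example can be illustrated with a simple product-of-scores fusion, sketched below. It assumes each model outputs a probability per label and that shape and color are independent, so a fruit's score is the product of its shape and color probabilities. The rule table, label names, and functions are hypothetical, and the article does not say how BrainFrame actually combines capsule outputs.

```python
from typing import Dict, Tuple

# Hypothetical rule table joining the two models' label vocabularies.
FRUIT_RULES: Dict[str, Tuple[str, str]] = {
    "apple": ("round", "red"),
    "lemon": ("round", "yellow"),
    "banana": ("elongated", "yellow"),
    "lime": ("round", "green"),
}


def combine(shape_probs: Dict[str, float], color_probs: Dict[str, float],
            rules: Dict[str, Tuple[str, str]] = FRUIT_RULES) -> Dict[str, float]:
    # Product fusion assumes the shape and color outputs are independent.
    scores = {fruit: shape_probs.get(shape, 0.0) * color_probs.get(color, 0.0)
              for fruit, (shape, color) in rules.items()}
    total = sum(scores.values())
    # Normalise so the fused scores sum to 1 (skipped if every score is 0).
    return {f: s / total for f, s in scores.items()} if total else scores


def best_fruit(shape_probs: Dict[str, float], color_probs: Dict[str, float]) -> str:
    scores = combine(shape_probs, color_probs)
    return max(scores, key=scores.get)


shape = {"round": 0.9, "elongated": 0.1}   # output of a shape model
color = {"yellow": 0.8, "red": 0.2}        # output of a color model
print(best_fruit(shape, color))            # lemon
```

Neither model alone can separate a lemon from an apple or a banana, but their combined evidence can, which is the point of composing components rather than training one monolithic model.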

The BrainFrame platform is available in an Edge AI Developers Kit for manufacturers, OEMs, systems integrators, and developers. The kit, the result of a collaboration between Aotu.ai and AAEON, includes a UPX-Edge fanless industrial computer powered by an 8th Generation Intel® Core™ i5 processor and two Intel® Movidius™ Myriad™ X VPUs, and comes with the Aotu.ai BrainFrame platform pre-installed.

Multi-tenant support even allows multiple users to access the toolkit simultaneously. “Developers can work on different models and upload them asynchronously, while an SI is connecting APIs to systems in the factory, and an end user is altering parameters on the GUI,” says Thiel.

Fast Fashion, Faster Development

With automated quality inspection handled by computer vision, operators at the garment production facility can catch costly defects and production errors much more efficiently than with the human eye. What’s more, the CV system is able to provide real-time data to the factory’s MES via Intel® Edge Insights for Industrial software.

Using an OS- and protocol-agnostic microservices architecture, Edge Insights for Industrial captures data from endpoints such as the smart-vision system and allows it to be analyzed in real time at the edge. As a result, any issues the computer vision system detects can be synced with the MES to help identify problems, such as equipment malfunctions, that could cause production defects and delay the ever-important time to market.

For retailers looking to produce new garments at a breakneck pace, that’s more than just a fashion statement.