5G Workloads Demand More Horsepower

The advantages of 5G networks are well documented. Compared to 4G, users can expect 100x faster download speeds, 10x lower latency, and support for 500x more devices in the same geographic space. These enhancements are expected to help usher in a wave of new applications, from autonomous vehicles and delivery drones to augmented reality and 4K/8K video streaming.

But what’s not as well understood is exactly how 5G infrastructure will be implemented to support these new applications at scale, and which underlying technologies will handle the massive amount of data that follows this uptick in performance.

It all starts with the network processors (NPUs) in 5G small cells, which must have the headroom to support a significant increase in data usage over the next decade. With the assistance of innovative design techniques, you can start designing such NPUs now.

Small 5G Cells Need Big Changes

Small cells are low-power radio access points that extend the core cellular network to end users. They have been a mainstay of 4G networks. What is unique in 5G is the increased number of devices and data traffic (as much as 4x per user, according to one Ericsson study), which means that small-cell network processors will have to work overtime.

Small cells include an FPGA for baseband processing, NPUs that handle Ethernet backhaul to the core network, CPU cores for scheduling, a modem, and associated memory and I/O.

Knowing that growing 5G workloads demand more horsepower, one leading cellular carrier began investigating the use of Intel® Atom® processors in a 5G small-cell proof of concept (PoC). While these processors provide more computational throughput, they also require a different architectural implementation. To accomplish this, the carrier enlisted DFI Inc., a worldwide supplier of high-performance computing technology.

5G Small Cells: It’s All About the Timing

Aside from advantages on the performance front, one notable aspect of Intel® processors in high-speed data processing is the time required to identify interrupts. As shown in Figure 1, when the FPGA baseband processor receives an RF signal, it sends a pulse 80 ns wide to the Intel processor-based NPU and to a separate 5G module. The processor, on the other hand, needs a pulse of at least 117 ns to identify a general-purpose I/O (GPIO) interrupt. After that, the Intel SoC experiences an interrupt latency before the first operations begin.

Why does this matter? Well, network communication is all about timing.

“Each device needs to be synchronized in time to make sure all of the data and actions are the same,” Ethan Wong, DFI Director of Product Management, explains. “When the FPGA sends a signal to both the 5G module and NPU, there’s a GPIO interrupt that happens. The interrupt latency is the time from receiving the signal until the device begins its first action.”

After the processor receives this signal, at least 20 to 25 µs pass before it can start the first action, which is too long. The 5G specification breaks process cycles into “slots,” which in this 5G small-cell PoC are defined at 125 µs.

“With only 125 µs, and a latency of 25 µs, you only have 100 µs to finish all of the processing and communications in one slot,” says Wong.
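The arithmetic behind that quote can be written out as a minimal sketch. The function name `processing_budget_us` is hypothetical and used only for illustration; the 125 µs slot and 25 µs latency are the figures quoted above.

```python
def processing_budget_us(slot_us: float, interrupt_latency_us: float) -> float:
    """Return the time left in one slot after the interrupt latency has elapsed.

    Hypothetical helper for illustration; it only subtracts the latency
    from the slot length, as described in the article.
    """
    if interrupt_latency_us > slot_us:
        raise ValueError("interrupt latency exceeds the slot length")
    return slot_us - interrupt_latency_us


SLOT_US = 125.0     # slot length used in the PoC (from the article)
LATENCY_US = 25.0   # interrupt latency quoted by DFI (from the article)

budget = processing_budget_us(SLOT_US, LATENCY_US)
# prints "100 us left, 80% of the slot"
print(f"{budget:.0f} us left, {budget / SLOT_US:.0%} of the slot")
```

In other words, a fifth of every slot is gone before the processor does any useful work, which is why shaving the latency matters.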

Bending Time

To overcome this timing issue, DFI implemented a couple of standard and non-standard solutions.

“We implemented a hardware circuit that extended the 80 ns pulse width to 200 ns so we could detect the signal,” Wong says. “And on the software side, we worked on minimizing the interrupt latency by using a different low-latency Linux kernel, which reduced latency from sometimes 40 µs to 20 µs.”
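To see why the pulse stretcher is needed, the widths mentioned in this article can be compared against the processor's minimum detectable pulse. This is only a sketch of the comparison, not a model of DFI's circuit; the helper names `is_detectable` and `margin_ns` are hypothetical.

```python
MIN_DETECT_NS = 117  # minimum pulse width the processor needs to identify a GPIO interrupt (from the article)


def is_detectable(pulse_ns: int, min_detect_ns: int = MIN_DETECT_NS) -> bool:
    """True if the pulse is at least as wide as the minimum the processor can identify."""
    return pulse_ns >= min_detect_ns


def margin_ns(pulse_ns: int, min_detect_ns: int = MIN_DETECT_NS) -> int:
    """Width to spare (positive) or shortfall (negative) against the detection threshold."""
    return pulse_ns - min_detect_ns


# 80 ns is the raw FPGA pulse; 200 ns is the width after DFI's hardware circuit.
for label, width in (("raw FPGA pulse", 80), ("stretched pulse", 200)):
    status = "detectable" if is_detectable(width) else "missed"
    print(f"{label}: {width} ns -> {status} (margin {margin_ns(width):+d} ns)")
```

The raw pulse falls 37 ns short of the threshold, while the stretched pulse clears it by 83 ns.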

DFI also optimized the PCIe connection between the NPU and 5G module to enhance overall system performance. But that still leaves slightly under 100 µs for processing and communication within the 125 µs time slot.

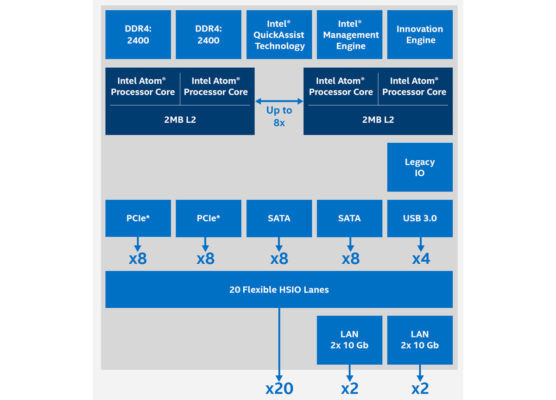

The carrier selected the 16-core C3000 processor for its low cost and power consumption, but the SoC also delivers more than enough compute performance and data throughput over its 10 GbE ports to make up for lost time (Figure 2).

Scaling 5G for the Future

Even more than speed, 5G is about capacity. As we consume more high-bandwidth data on mobile devices, and more everyday objects join the IoT, networks will have to expand to support them.

From a CAPEX standpoint, the best course of action is to design systems with that in mind. NPUs like the 16-core C3000 processor provide enough performance for today’s workloads, as well as headroom to accommodate growing amounts of data traffic in the future. And network hardware specialists like DFI continue to advance these solutions by optimizing PCIe signals, tweaking Linux software stacks, and the like.

5G small-cell networks are already being deployed on every continent. If you’re looking to scale into the future, the time is now.