Moving Machine Vision from Inspection to Prevention

Fifty percent of a modern car’s material volume is plastic. And the vast majority of that, from oil pans to bumpers to dashboards, is fabricated through a process called plastic injection molding.

As the name suggests, plastic injection molding machinery injects molten plastic into a rigid mold, where it is allowed to set. The setting process can take anywhere from hours to days. Quality checks usually happen at the end of the production line, where inspectors manually deconstruct samples from each batch to look for defects.

“They’re taking two or more parts per shift off the line, destructively testing them, and making a call on whether the parts that were produced that shift were good or bad,” explains Scott Everett, Co-Founder and CEO of machine vision solutions company Eigen Innovations. “It takes basically an entire day just to get through a couple of tests because they’re so labor-intensive and then you only end up with measurements for two out of thousands of products.”

At first glance, this seems like an application where machine vision cameras could make quick work of an outdated practice. But while the concepts behind plastic injection molding are relatively simple, it’s a complex process. For example, injection molds are susceptible to physical variations in raw materials, temperature and humidity changes in the production environment, and slight operational inconsistencies in the manufacturing equipment itself.

The goal isn’t just to identify that a part is defective, but to provide useful quality analytics about the root cause of defects before hours of bad parts are produced. By monitoring every fabricated part, you can start to predict when the process is at risk of producing defective batches. But the number of variables in play makes this difficult for machine vision cameras unless the information they produce can be contextualized and then analyzed in real time using visual AI.

Beyond the Lens of Machine Vision Quality Inspection

Like all applications of visual AI, developing an ML video analysis algorithm starts with capturing data, labeling it, and training a model. On the plus side, there’s no shortage of vision and process data available during the production of complex parts. On the downside, the mountain of data that’s generated can contribute to the problem of identifying what exactly is causing a manufacturing defect in the first place.

Therefore, an ML video analysis solution used for predictive analytics in complex manufacturing environments must normalize variables as much as possible. This means visual AI algorithms need information about the desired product outcome as well as the operating characteristics of manufacturing equipment, which would provide a reference from which to analyze parts for defects and anomalies.
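To make the idea of a reference concrete, here is a minimal sketch, assuming each part has already been reduced to a few numeric features. The feature names (`surface_temp_c`, `edge_gap_mm`), the z-score approach, and the threshold of 3 are illustrative assumptions, not a description of any vendor's method.

```python
from statistics import mean, stdev


def build_reference(good_parts):
    """Compute per-feature (mean, standard deviation) from known-good parts.

    `good_parts` is a list of dicts with identical keys (hypothetical features).
    """
    features = list(good_parts[0].keys())
    return {
        f: (mean(p[f] for p in good_parts), stdev(p[f] for p in good_parts))
        for f in features
    }


def deviation_scores(part, reference):
    """Return how many standard deviations each feature sits from the reference."""
    scores = {}
    for f, (mu, sigma) in reference.items():
        # A zero spread means the reference gives no scale; report no deviation.
        scores[f] = 0.0 if sigma == 0 else (part[f] - mu) / sigma
    return scores


def is_anomalous(part, reference, threshold=3.0):
    """Flag a part when any feature deviates beyond the (assumed) threshold."""
    return any(abs(z) > threshold for z in deviation_scores(part, reference).values())


# Hypothetical measurements from parts that passed inspection.
good_parts = [
    {"surface_temp_c": 61.0, "edge_gap_mm": 0.50},
    {"surface_temp_c": 62.0, "edge_gap_mm": 0.52},
    {"surface_temp_c": 60.0, "edge_gap_mm": 0.48},
    {"surface_temp_c": 61.5, "edge_gap_mm": 0.51},
    {"surface_temp_c": 60.5, "edge_gap_mm": 0.49},
]
reference = build_reference(good_parts)
suspect = {"surface_temp_c": 61.2, "edge_gap_mm": 0.58}
print(is_anomalous(suspect, reference))
```

In practice the reference would come from far more parts and from richer image features, but the principle is the same: a part is judged against what the process normally produces rather than in isolation.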

Eigen Innovations’ industrial software platform captures both raw image data from thermal and optical cameras and process data from PLCs connected to fabrication machines. This data is combined to create traceable, virtual profiles of the part being fabricated.
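One way to picture such a profile is as a record that pairs each captured image with the PLC reading closest to it in time. The sketch below is an assumption-laden illustration: the class names, fields, and nearest-timestamp join are hypothetical and do not reflect Eigen's actual data model.

```python
from bisect import bisect_left
from dataclasses import dataclass, field


@dataclass
class PlcSample:
    timestamp: float  # seconds since some shared clock origin
    values: dict      # e.g. {"coolant_temp_c": 21.5} (hypothetical tag names)


@dataclass
class PartProfile:
    part_id: str
    image_ref: str       # path or key of the stored camera frame
    captured_at: float   # timestamp of the frame
    process: dict = field(default_factory=dict)


def nearest_sample(samples, timestamp):
    """Return the PLC sample closest in time; `samples` must be sorted and non-empty."""
    times = [s.timestamp for s in samples]
    i = bisect_left(times, timestamp)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = samples[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda s: abs(s.timestamp - timestamp))


def build_profile(part_id, image_ref, captured_at, plc_samples):
    """Join one image capture with the process conditions recorded nearest to it."""
    sample = nearest_sample(plc_samples, captured_at)
    return PartProfile(part_id, image_ref, captured_at, dict(sample.values))


plc_log = [
    PlcSample(10.0, {"coolant_temp_c": 21.0}),
    PlcSample(12.0, {"coolant_temp_c": 21.5}),
    PlcSample(14.0, {"coolant_temp_c": 22.0}),
]
profile = build_profile("part-0001", "frames/part-0001.png", 12.9, plc_log)
print(profile.process)
```

Keeping the part ID, image reference, and process values in one record is what makes a later defect traceable back to the machine conditions at the moment the part was made.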

Then, during the manufacturing process, AI models are generated based on these profiles and used to inspect parts for defects caused by certain conditions. But because the platform is connected to the manufacturing equipment’s control system, visual inferences can be correlated with operating conditions like the speed or temperature of machinery that may be causing the defects in the first place.

“We can correlate the variations we see in the image around quality to the processing conditions that are happening on the machine,” Everett says. “That gives us the predictive capacity to say, ‘Hey, we’re starting to see a trend that is going to lead to warp, so you need to adjust your coolant temperature or the temperature of your material.’”
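The kind of correlation and trend warning Everett describes can be sketched in a few lines. This is a simplified illustration under stated assumptions: the warp measurements, coolant temperatures, limit, and look-ahead horizon are made-up values, and a plain Pearson correlation with a straight-line projection stands in for whatever models the platform actually uses.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def slope(ys):
    """Least-squares slope of `ys` against the part index 0, 1, 2, ..."""
    n = len(ys)
    mx = (n - 1) / 2
    my = sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in enumerate(ys))
    den = sum((x - mx) ** 2 for x in range(n))
    return num / den


def warp_trend_alert(warp_scores, limit, horizon):
    """True if a straight-line projection exceeds `limit` within `horizon` more parts."""
    projected = warp_scores[-1] + slope(warp_scores) * horizon
    return projected > limit


# Hypothetical per-part readings: image-derived warp and PLC coolant temperature.
coolant_temp_c = [20.0, 20.5, 21.0, 21.5, 22.0, 22.5]
warp_mm = [0.10, 0.12, 0.13, 0.16, 0.18, 0.21]

print(round(pearson(coolant_temp_c, warp_mm), 3))
print(warp_trend_alert(warp_mm, limit=0.40, horizon=10))
```

A strong correlation points the operator at the process variable worth adjusting, while the projection gives the early warning: the parts are still within tolerance, but the trend says they will not be for long.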

Inside the Eye of Predictive Machine Vision

While Eigen’s industrial software platform is an edge-to-cloud solution, it relies heavily on endpoint data, so most of the initial inferencing and analysis occurs on an industrial gateway computing device on the factory floor.

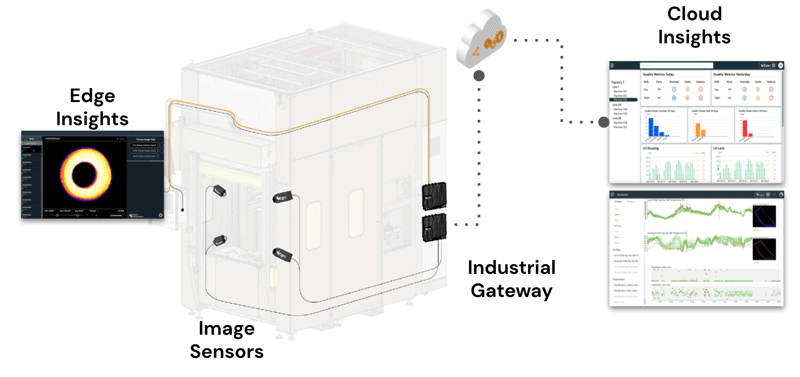

The industrial gateway aggregates image and process data before pushing it to an interactive edge human-machine interface, which issues real-time alerts and lets quality engineers label data and events so algorithms can be optimized over time. The gateway also routes data to the cloud environment for further monitoring, analysis, and model training (Figure 1).

Eigen’s machine vision software platform integrates these components and ties in industry-standard cameras and PLCs using open APIs. But the key to allowing AI algorithms and their data to flow across all this infrastructure is the Intel® Distribution of OpenVINO™ toolkit, a software suite that optimizes models created in various development frameworks for execution on a variety of hardware types in edge, fog, or cloud environments.

“From day one we’ve deployed edge devices using Intel chipsets and that’s where we leverage OpenVINO for performance boosts and flexibility. That’s the workhorse of capturing the data, running the models, and pushing everything up to our cloud platform,” Everett says. “We don’t have to worry about performance anymore because OpenVINO handles the portability of models across chipsets.”

“That gives us the capacity to do really long-range analysis on hundreds of thousands of parts and create models off of those types of trends,” he adds.

The Good, the Bad, and the Scrapped

Eigen Innovations’ machine vision software platform is already paying dividends at major automotive manufacturers and suppliers worldwide, where it’s saving time, cutting costs, and reducing waste.

Rather than producing batches of injection-molded car parts only to discover later that they don’t meet quality standards, Eigen customers are alerted to anomalies during the fabrication process and can take action to prevent defective parts from being created. The approach also eliminates the time and material lost to destructive quality testing.

“Our typical payback per machine can be hundreds of thousands, if not millions, of dollars on really large machines where downtime and the cost of quality stacks up very quickly,” Everett says. “And it’s as much about providing certainty of every good part as it is detecting the bad parts.”

“We’re approaching a world where shipping a part with insufficient data to prove that it’s good is really just as bad as shipping a bad part because of the risk factor,” he adds.

This article was edited by Christina Cardoza, Associate Editorial Director for insight.tech.